What went right?

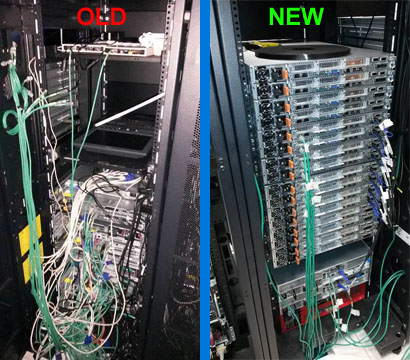

- The HPC rack was neatened up. This involved moving and consolidating servers, making space for PDU's and removing redundant cables that were impeding airflow. New HPC servers were installed. This task took two entire days as other IT equipment had to be moved as well. The picture below gives some idea of the work that was done.

- All HPC head nodes and worker nodes were patched up to the latest levels.

- Hex worker nodes now all have infiniband cables and consistent level of MPI software.

What went wrong?

- HPC P2V issues - As the BL20 blades are being decommissioned we had to virtualize the HPC head node. This took much longer than anticipated. VMWare's P2V app doesn't work quite as advertised and we had to implement some cunning workarounds to get the virtual machine to boot. This required recreating the image on a desktop first and doing a DD copy, hence the time delay. Hopefully we'll never need to do something like this again.

- Cloud series switch related problems - One of the 10GB switches in the Unicloud rack had issues so we dropped the CLOUD series servers for a day. This has been rectified.

- Hex MPI issues - Part of the OS patching required an upgrade of the infiniband drivers and hence the infiniband openMPI software. This overwrote a configuration file which meant that MPI jobs decided to ignore the scheduler's core level distribution and start distributing each user's jobs from core zero, leading to contention and slowness. This has now been fixed.

What new HPC servers?

We have doubled up on our 600 series and will be installing another 8 servers in the next months as power and cooling in the data centre allow. We are also investigating the purchase of another GPU server and possibly upgrading the GPU cards, although this is tentative. Numerous Grid serversservices were decommissioned in preparation for our EMI upgrade. More on this in a later post though.

HPC rack being prepared. New 600 series blades still being cabled and a space waiting for a new GPU server hopefully.