The disk cluster is configured and running. Four Dell R740XD servers, each with twelve 10TB NL-SAS drives and two 240GB SSDs, provide a 345TB scratch volume, an 8TB /home volume and a 4TB software volume. Each server also has two 120GB SSDs for the OS. The storage and compute nodes (Dell C6420s) are all connected at 100Gb/s via a Mellanox MSB7800 EDR switch.

Each storage target is natively configured with RAID6, which means it can lose two drives simultaneously, at the cost of write speed and rebuild performance. We decided to use RAID6 as opposed to RAID10 as we will only be backing up data on the /home and software volumes, not scratch. A nice feature of Dell’s R740XD is the ability to hot swap drives from any of the three RAID sets, front or back.
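As a rough sanity check on the capacity figures, the RAID6 arithmetic can be sketched in a few lines of Python. The drive counts and sizes come from the text; the layout of one 12-drive RAID6 set per server is an assumption made for illustration, not a statement of how the arrays were actually carved up.

```python
def raid6_usable_tb(drives: int, drive_tb: float) -> float:
    """Usable capacity of one RAID6 set: two drives' worth goes to parity."""
    if drives < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drives - 2) * drive_tb


SERVERS = 4             # from the text
DRIVES_PER_SERVER = 12  # from the text
DRIVE_TB = 10           # decimal terabytes, from the text

# Assumption: a single 12-drive RAID6 set per server.
per_server_tb = raid6_usable_tb(DRIVES_PER_SERVER, DRIVE_TB)
total_tb = SERVERS * per_server_tb

# The same capacity expressed in binary tebibytes.
total_tib = total_tb * 1e12 / 2**40

print(f"{per_server_tb:.0f} TB per server, {total_tb:.0f} TB total "
      f"({total_tib:.1f} TiB)")
```

For comparison, the three volumes listed above add up to 357TB, so under this assumed layout there is a little room left for file system overhead.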

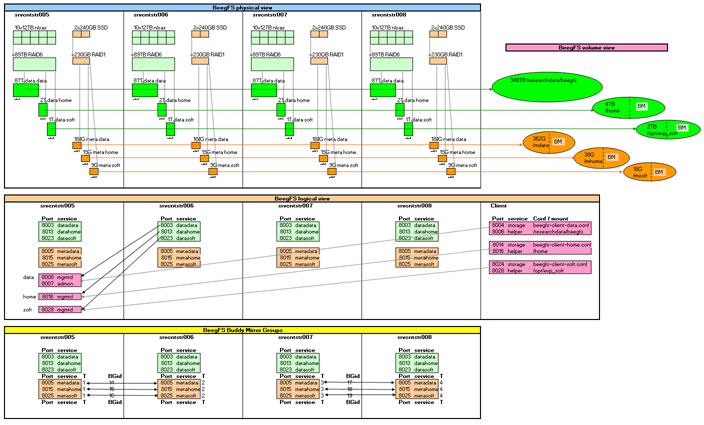

The storage servers also run metadata services, which manage the striping and placement of files on the storage disks. Having multiple metadata services decreases the read/write time for file access. The metadata is stored on mirrored SSD drives for additional speed. As RAID1 is less reliable than RAID6, the metadata services are buddy mirrored, which means we can lose an entire metadata RAID set and the service will automatically fail over to the second server.
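To make the idea of striping concrete, here is a minimal sketch of how a file might be dealt out across storage targets in fixed-size chunks. It is a simplified round-robin model, not the file system's actual placement logic, and the function name, target names and chunk size are all hypothetical values chosen for illustration.

```python
def stripe_layout(file_size: int, chunk_size: int,
                  targets: list[str]) -> dict[str, int]:
    """Return bytes stored per target when chunks are dealt out round-robin."""
    if chunk_size <= 0 or not targets:
        raise ValueError("need a positive chunk size and at least one target")
    per_target = {t: 0 for t in targets}
    offset = 0
    index = 0
    while offset < file_size:
        # The last chunk may be shorter than chunk_size.
        length = min(chunk_size, file_size - offset)
        per_target[targets[index % len(targets)]] += length
        offset += length
        index += 1
    return per_target


# Hypothetical example: a 10 MiB file, 512 KiB chunks, four targets.
layout = stripe_layout(10 * 2**20, 512 * 2**10,
                       ["stor1", "stor2", "stor3", "stor4"])
for target, nbytes in layout.items():
    print(f"{target}: {nbytes / 2**20:.2f} MiB")
```

Because each target receives every fourth chunk, a large sequential read or write is spread evenly over all four servers, which is why throughput scales with the number of targets.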

BeeGFS is tricky to set up, especially in multi-mode, and requires a great deal of planning. Multi-mode allows us to run several BeeGFS clusters in one environment, which means we can provision separate disk sets and quotas depending on the storage requirements. Below is the logical planning diagram of our multi-mode services.

We are running the latest version of BeeGFS, which uses systemd to manage the multi-mode services (with the exception of the clients, which still use init scripts). Version 7 also uses its own libbeegfs-ib package to build and manage the RDMA client services.

It’s critical to adjust and fine-tune BeeGFS, especially if one is using a non-standard interconnect. A few small file system config adjustments took our native write speed from 15Gb/s to 18Gb/s.

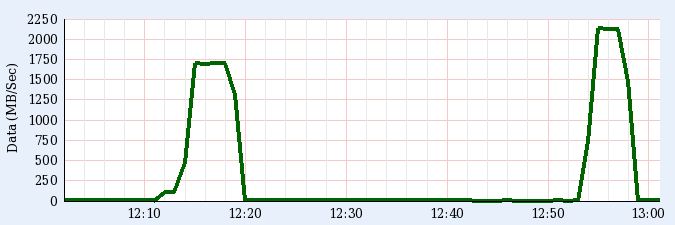

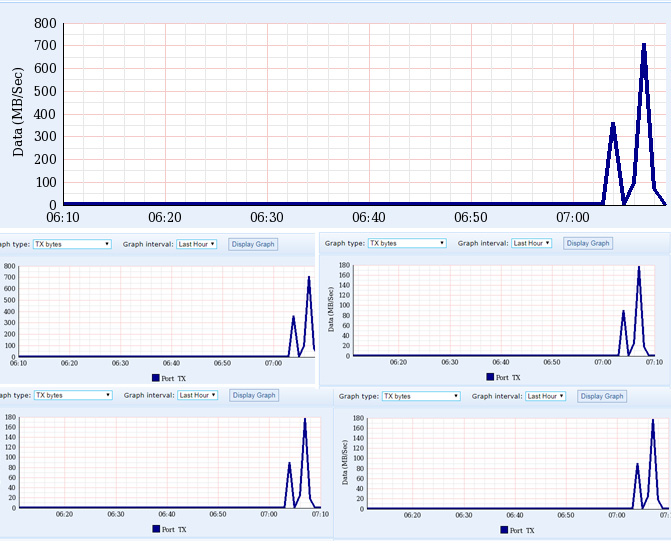

An example of disk write and striping is given below. A user is copying a 52GB file from one BeeGFS disk to another. The overall write speed from the compute node is 5.6GB/s, and this is load balanced across all four storage servers at approximately 1.4GB/s each (about 11.2Gb/s), which is approaching the 12Gb/s limit of the RAID controller in each server. Keep in mind that the file is being read from the storage targets at the same time, so the combined RW speed is actually 11+GB/s. Yes, that’s a capital B.
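The bytes-versus-bits arithmetic behind those figures is easy to get wrong, so here it is worked through in Python. All inputs are the numbers quoted above; the only assumptions are that the load is split evenly across the four servers and that the read rate matches the write rate.

```python
BITS_PER_BYTE = 8

write_gbytes_per_s = 5.6     # overall write speed from the compute node (GB/s)
storage_servers = 4
controller_limit_gbits = 12  # RAID controller limit per server (Gb/s)
file_size_gbytes = 52

# Assumption: the write load is balanced evenly across the servers.
per_server_gbytes = write_gbytes_per_s / storage_servers
per_server_gbits = per_server_gbytes * BITS_PER_BYTE
controller_utilisation = per_server_gbits / controller_limit_gbits

# Assumption: the file is read at the same rate it is written.
combined_gbytes = 2 * write_gbytes_per_s
copy_seconds = file_size_gbytes / write_gbytes_per_s

print(f"per server: {per_server_gbytes:.1f} GB/s = {per_server_gbits:.1f} Gb/s")
print(f"RAID controller utilisation: {controller_utilisation:.0%}")
print(f"combined read + write: {combined_gbytes:.1f} GB/s")
print(f"time to copy {file_size_gbytes} GB: {copy_seconds:.1f} s")
```

On these numbers each RAID controller is running at a little over 90% of its quoted limit for writes alone.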

Astute readers will have noted that, with only 4 targets, the switch is very unlikely to be saturated given it is capable of 100Gb/s. However, the interconnect will also be used for MPI inter-process communication of parallel jobs.
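The headroom can be estimated with a quick back-of-the-envelope calculation. This sketch reuses the 5.6GB/s copy example and assumes each port carries the nominal 100Gb/s in each direction; real usable bandwidth will be somewhat lower once protocol overhead is accounted for.

```python
BITS_PER_BYTE = 8
LINK_GBITS = 100             # nominal per-port rate, per direction (assumed)

write_gbytes_per_s = 5.6     # from the copy example
storage_servers = 4

# Traffic leaving the compute node towards the storage servers.
compute_node_gbits = write_gbytes_per_s * BITS_PER_BYTE
compute_link_utilisation = compute_node_gbits / LINK_GBITS

# Assumption: each storage server receives an equal share.
storage_link_gbits = compute_node_gbits / storage_servers
storage_link_utilisation = storage_link_gbits / LINK_GBITS

print(f"compute node link: {compute_node_gbits:.1f} Gb/s "
      f"({compute_link_utilisation:.0%} of the port)")
print(f"each storage link: {storage_link_gbits:.1f} Gb/s "
      f"({storage_link_utilisation:.0%} of the port)")
```

Even in this heavy copy, the compute node uses under half of its port in each direction and each storage server uses roughly a ninth, which leaves the remainder for MPI traffic.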